Top Deep Learning Frameworks in 2025: TensorFlow, PyTorch, or Something New?

If you recently signed up for a Deep Learning Course, or you’re thinking about doing so, you have probably heard the names TensorFlow, PyTorch and JAX. These aren’t just software packages; they are the building blocks of how AI models are created, trained and deployed. But with deep learning moving at breakneck speed, which frameworks are actually worth talking about in 2025? And is it still a two-horse race between TensorFlow and PyTorch?

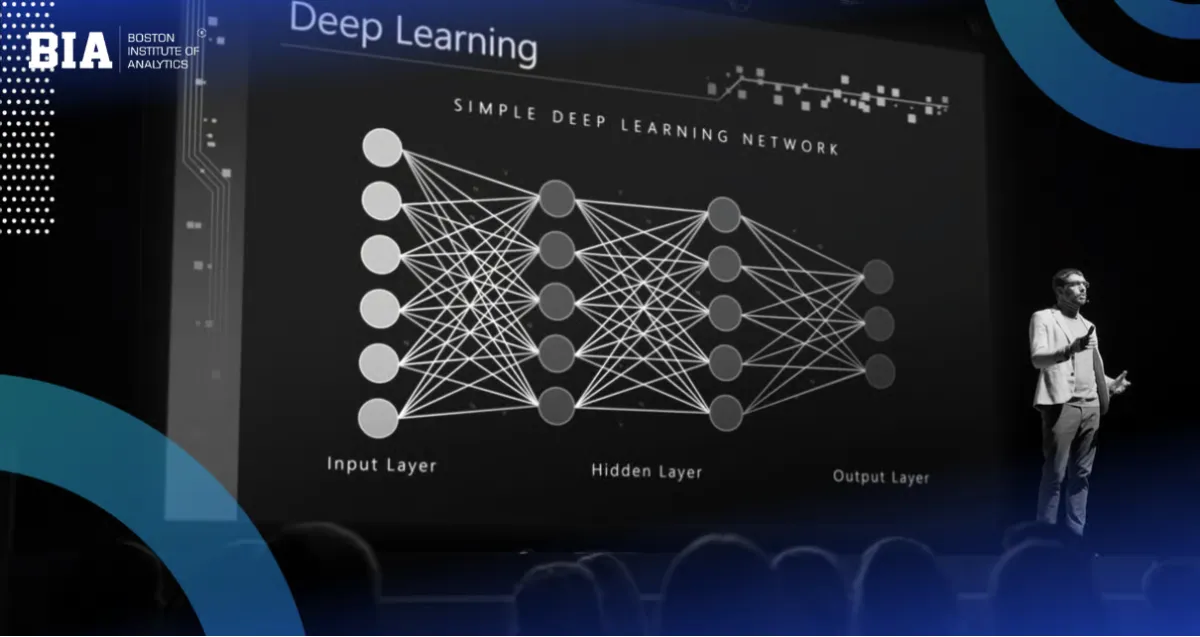

Why Deep Learning Frameworks Matter

Deep learning frameworks have changed the way we develop and integrate artificial intelligence (AI) models. They give researchers and developers the structure they need to build, train, and tune complex models efficiently. Without them, developing deep learning models would be cumbersome and laborious.

Efficiency and Productivity

Deep learning frameworks matter for several reasons. First, they automate many of the repetitive tasks involved in building a model. Frameworks such as TensorFlow, PyTorch, and Keras offer abstractions that cut the time it takes to get a model working, with pre-built functions for tasks such as matrix manipulation, optimization algorithms, and gradient calculations. This frees developers to focus on innovation and rollout rather than low-level plumbing.
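To make that concrete, here is a minimal, framework-free sketch of the work a framework takes off your hands: fitting a straight line by gradient descent with gradients worked out by hand. The function name `fit_line`, the toy data, the learning rate, and the step count are all illustrative assumptions, not part of any library.

```python
# Framework-free sketch: fit y = w * x + b by gradient descent.
# All names and values here are illustrative assumptions.

def fit_line(xs, ys, lr=0.05, steps=2000):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Forward pass: prediction error for every data point.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error, derived by hand.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Plain gradient descent update.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```

Even for this two-parameter model, the gradient formulas and the update rule had to be written manually. A framework derives the gradients automatically and ships tested optimizers, which is what makes models with millions of parameters practical.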

Flexibility and Scalability

Frameworks also provide flexibility and scalability. As AI models grow more complex, frameworks help scale training from one GPU to multiple GPUs or to distributed systems. That scalability is vital when the dataset is large or when training a deep neural network would otherwise take an inordinate amount of time.
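One common way frameworks scale across devices is data parallelism: each device computes gradients on its own slice of the batch, and the results are averaged. The sketch below simulates that idea on a single machine in plain Python. The function names and the toy model `y = w * x` are assumptions made for illustration, and the plain average stands in for the all-reduce step a real framework would perform across devices.

```python
# Simulated data parallelism in plain Python (illustrative names and data).

def mse_grad(w, xs, ys):
    """Gradient of the mean squared error for the model y = w * x."""
    n = len(xs)
    return 2.0 / n * sum((w * x - y) * x for x, y in zip(xs, ys))

def data_parallel_grad(w, xs, ys, num_workers):
    """Shard the batch, compute one gradient per worker, then average them."""
    shard = len(xs) // num_workers  # assumes the batch divides evenly
    grads = []
    for k in range(num_workers):
        lo, hi = k * shard, (k + 1) * shard
        grads.append(mse_grad(w, xs[lo:hi], ys[lo:hi]))  # one shard per "device"
    return sum(grads) / num_workers  # stand-in for an all-reduce mean

xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [3.0 * x for x in xs]
full = mse_grad(0.5, xs, ys)
sharded = data_parallel_grad(0.5, xs, ys, num_workers=4)
print(full, sharded)
```

With equally sized shards, the averaged gradient matches the full-batch gradient, so the workers can share the load without changing the result of each training step.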

Community Support and Resources

Another big plus is the large, active community around each of these frameworks. The resources available to developers (tutorials, pre-trained models, and help when they run into trouble) greatly reduce the time it takes to complete a project. Because many of the frameworks are open source, they are continuously improved, with experts from all over the world sharing their contributions.

TensorFlow in 2025: The Veteran Keeps Evolving

As of 2025, TensorFlow, one of the most widely recognized deep learning frameworks, continues to evolve and stay relevant. Released by Google in 2015 and updated many times since, TensorFlow is still widely used and actively maintained for both research and production AI applications. It remains a powerful environment for building scalable machine learning models.

A Simplified User Experience

The move from TensorFlow 1.x to 2.x remains one of the most significant changes to the user experience. In 1.x, you had to define a static graph and run it inside a session, which meant writing a lot of boilerplate before you got anywhere near a model. From 2.x onward, eager execution is the default and Keras is the built-in high-level API, so seasoned users can build sophisticated models right away and learners can follow TensorFlow’s guides and get results quickly. Improvements to the API and clearer documentation have broadened TensorFlow’s appeal considerably in recent years.

Advancements in Performance and Scalability

By 2025, TensorFlow’s performance optimizations have come a long way. The framework makes more effective use of hardware accelerators such as TPUs and GPUs, which shortens training time. Its integration with distributed computing systems has also improved, so it is much easier to scale models across multiple machines. That matters for organizations that work with very large datasets and models that demand enormous amounts of compute.

Expanded Support for Specialized Models

TensorFlow has broadened its scope to support more AI tasks, including natural language processing (NLP), computer vision, and reinforcement learning (RL). With the rise of specialized neural architectures such as transformers, there are now pre-built modules and optimized tools for specific use cases: transformer components for NLP, for example, and convolutional neural networks (CNNs) for computer vision. TensorFlow is also modular, so developers can pick up existing tools and solutions instead of building everything from scratch.

A Thriving Ecosystem

By 2025, TensorFlow’s ecosystem includes tools for deployment, monitoring, and optimization: TensorFlow Serving for serving models in production, TensorFlow Lite for mobile and edge devices, and TensorFlow.js for the browser. As these tools have matured, they have let developers deploy a model on many different platforms, whether in mobile apps or on web pages. Likewise, TensorFlow Hub has become a marketplace for pre-trained models, which makes starting a project much easier.

PyTorch in 2025: The Community Favorite Still Rules Research

By 2025, PyTorch is a clear leader among AI frameworks for research and experimentation. First known for its dynamic computation graphs and its intuitive, user-friendly design, PyTorch quickly became the favoured platform for researchers and practitioners. Its Pythonic, flexible nature is still the main reason it stays at the forefront of research.

A Research-First Framework

What sets PyTorch apart from other deep learning frameworks is its focus on research. Its intuitive design makes experimentation and iteration easy, so researchers can develop novel architectures and test ideas as they go. By 2025, PyTorch has been adopted almost universally in academia, and a large share of machine learning papers and new ideas are built on it. The framework lets researchers work with very few constraints, which has supported advances in natural language processing (NLP), computer vision, reinforcement learning, and more.
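The "dynamic computation graph" idea behind that ease of experimentation can be sketched in a few lines of plain Python. The toy `Value` class below is a hypothetical illustration, not PyTorch’s API: it records each operation as the code runs, so ordinary Python loops and `if` statements decide the shape of the graph, and `backward()` then applies the chain rule in reverse.

```python
# Toy define-by-run autodiff (hypothetical class, not a real library API).

class Value:
    """A scalar that records the operations applied to it."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))
        def backward_fn():
            self.grad += out.grad
            other.grad += out.grad
        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))
        def backward_fn():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad
        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Order the recorded graph topologically, then walk it in reverse.
        order, seen = [], set()
        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()

def model(x, depth):
    # Ordinary Python control flow decides the graph on every call.
    y = x
    for _ in range(depth):
        y = y * x if y.data < 100 else y + x
    return y

x = Value(3.0)
y = model(x, depth=2)  # here the recorded graph is x * x * x
y.backward()
print(y.data, x.grad)
```

Changing `depth` or the input changes the recorded graph with no separate compilation step, which is the property that makes this style convenient for trying out new architectures.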

Advances in Performance and Efficiency

While flexibility is what PyTorch is best known for, the framework has also made tremendous strides in performance optimization. With better use of hardware accelerators and software optimizations, PyTorch now delivers fast, scalable distributed training, from the solo developer to the enterprise. With support for GPUs, TPUs, and multi-node clusters that can be provisioned on demand through cloud providers, PyTorch has become a common choice for high-performance AI models.

Integration with the Broader AI Ecosystem

By 2025, the PyTorch ecosystem has grown into a collection of libraries and tools that add functionality when needed. Libraries such as TorchVision (for image processing), TorchText (for text processing), and TorchAudio (for sound processing) are mature and optimized for their specific jobs. PyTorch also integrates closely with many popular toolsets, especially in natural language processing (Hugging Face, for example), as well as reinforcement learning toolkits such as those from OpenAI. All of these can be combined with existing solutions, and they make it easy to pull in pre-trained, performance-tuned models for fine-tuning and further research.

JAX: The Quiet Contender That’s Now a Power Player

JAX, once an underdog in the deep learning space, has become a major player by 2025. Developed by Google as a library for high-performance numerical computing, JAX expanded into deep learning within a few short years and is now a common framework for both AI research and production. Its combination of speed, flexibility, and scalability makes it a strong fit for developing advanced machine learning models, which often rely on techniques from scientific computing.

Speed and Differentiability: The Core Strengths

What makes JAX fundamentally different from other deep learning frameworks is its focus on high-performance differentiable computing. JAX can take expressive mathematical functions, differentiate them, and run the resulting computations at very high speed. That makes it a popular choice for gradient-based optimization in large-scale simulations and for complex model configurations that need wide hyperparameter searches. By combining automatic differentiation with just-in-time (JIT) compilation, JAX supports rapid experimentation while executing models as fast as possible. It is easy to see why JAX has become common in reinforcement learning, generative modelling, and scientific computing research, where execution speed matters just as much as numerical accuracy.
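The idea of differentiation as a function transformation can be sketched without any framework. The toy `grad` below is an illustration under stated assumptions: it uses forward-mode differentiation with dual numbers, whereas JAX’s own `jax.grad` uses reverse mode and works on arrays, and nothing here is JIT-compiled. The `Dual` class and the example function `f` are hypothetical names chosen for the sketch.

```python
# Toy "grad" transformation using dual numbers (illustrative, not JAX itself).

class Dual:
    """A number a + b*eps with eps**2 == 0; the eps part carries the derivative."""

    def __init__(self, val, eps=0.0):
        self.val, self.eps = val, eps

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.eps + other.eps)
    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (a + b*eps)(c + d*eps) = ac + (ad + bc)*eps
        return Dual(self.val * other.val,
                    self.val * other.eps + self.eps * other.val)
    __rmul__ = __mul__

def grad(f):
    """Return a new function that computes df/dx for a one-argument f."""
    def df(x):
        return f(Dual(x, 1.0)).eps
    return df

def f(x):
    return x * x * x + 2.0 * x  # f'(x) = 3x^2 + 2, f''(x) = 6x

first = grad(f)(3.0)
second = grad(grad(f))(3.0)  # transformations compose
print(first, second)
```

Because `grad` takes a function and returns a function, it can be applied repeatedly or combined with other transformations. That composability is the design idea that JAX builds on with `jax.grad` and `jax.jit`.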

Seamless Scalability with Hardware Acceleration

By 2025, JAX has become a heavyweight when it comes to using hardware accelerators such as GPUs and TPUs. Integration with these accelerators is seamless, and distributed computing is supported out of the box. That makes JAX well suited to training large-scale models in parallel, whether on a single machine or across a distributed cluster as runs become more compute-intensive.

Scalability is part of JAX’s design, so performance holds up as workloads grow and computations become more sophisticated. JAX is also tightly integrated with XLA (Accelerated Linear Algebra), a compiler that optimizes code for different hardware backends. That means faster execution without users having to hand-optimize their code or work out which backend to target.

Other Frameworks Worth Knowing in 2025

In 2025, TensorFlow, PyTorch, and JAX remain the most popular frameworks for deep learning, but several other tools matter even though they are not as broadly adopted as the top three. Many of them offer innovative features or distinct advantages for specific use cases, from performance on edge devices to specialized applications such as time-series analysis. Here are a few that every programmer and researcher working in AI should be aware of in 2025.

MXNet: Lightweight and Flexible for Production

MXNet, an Apache Software Foundation project, is lightweight, flexible, and easy to work with when building models, which made it a strong choice for both research and production environments. Note, however, that Apache retired the project in 2023 and moved it to the Apache Attic, so it is no longer actively developed. It is worth knowing mainly for existing deployments and for its design ideas. MXNet was built primarily to scale large models easily and to run jobs on cloud-based or distributed systems. Its flexibility comes from combining symbolic and imperative programming: a developer can experiment with models rapidly while still keeping control over performance.
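The difference between imperative and symbolic programming is easier to see side by side. The sketch below is plain Python, not MXNet code. The `Node` class and the `var` and `run` helpers are hypothetical names used only to illustrate the two styles.

```python
# Imperative vs. symbolic style in plain Python (illustrative names only).

# Imperative: each line runs immediately and returns a number.
def imperative(x, w, b):
    h = x * w
    return h + b

# Symbolic: first describe the computation as a graph, then execute it.
class Node:
    def __init__(self, op, inputs=(), name=None):
        self.op, self.inputs, self.name = op, inputs, name

    def __add__(self, other):
        return Node("add", (self, other))

    def __mul__(self, other):
        return Node("mul", (self, other))

def var(name):
    return Node("var", name=name)

def run(node, bindings):
    """Evaluate a graph once concrete values are bound to its variables."""
    if node.op == "var":
        return bindings[node.name]
    left, right = (run(i, bindings) for i in node.inputs)
    return left + right if node.op == "add" else left * right

graph = var("x") * var("w") + var("b")  # nothing is computed yet
eager = imperative(2.0, 3.0, 1.0)
deferred = run(graph, {"x": 2.0, "w": 3.0, "b": 1.0})
print(eager, deferred)
```

The imperative version is easier to debug and experiment with. The symbolic version gives the framework a complete graph it can inspect and optimize before running, and a hybrid design aims to offer both.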

Caffe2: The Lightweight Performer for Mobile and Embedded Systems

Caffe2 is well known among AI enthusiasts for its design goals: high performance and low-latency inference on mobile devices. It has largely been overshadowed by PyTorch, into which its code was merged in 2018. Its lasting value lies in interoperability through ONNX (Open Neural Network Exchange): models trained in any of the frameworks described above can be exported to ONNX and deployed on mobile devices and production systems.

TensorFlow Lite: Optimized for Edge Devices and Mobile

While TensorFlow is one of the best-known machine learning frameworks, TensorFlow Lite has become one of the most widely used solutions for deploying deep learning models on mobile and edge devices. By 2025, TensorFlow Lite offers better performance with a smaller memory footprint, lower latency, and wider hardware support. It supports both Android and iOS and is optimized for smartphones, wearables, and IoT devices.

Hugging Face: The NLP Powerhouse

In 2025, Hugging Face sits at the leading edge of natural language processing (NLP). It maintains the Transformers library, which offers broad support for pre-trained models for text generation, translation, question answering, and more. Hugging Face also hosts well-known models such as GPT-2, BERT, and T5, and it puts developer accessibility first, with tools for fine-tuning models on a custom dataset.

ONNX: The Interoperability Champion

Although it is not a framework in and of itself, the Open Neural Network Exchange (ONNX) format has become an important part of the AI ecosystem of 2025. ONNX provides a way to move models from one deep learning framework to another, for example from PyTorch to TensorFlow or Caffe2 and back. Because it is framework-agnostic, ONNX lets developers take advantage of the strengths of different tools and deploy their models to a variety of platforms.

DeepLearning4J: The Java-Based Framework

For Java developers, DeepLearning4J is still a strong option in 2025. DeepLearning4J (DL4J) is a deep learning framework that offers a comprehensive set of tools for developing, training, and deploying machine learning models in enterprise AI solutions. One of its biggest strengths is that it fits naturally into the Java Virtual Machine (JVM) ecosystem, which makes it very attractive to organizations whose technology stacks are already Java-heavy.

Final Thoughts

In 2025, the landscape of deep learning frameworks is changing faster than ever. TensorFlow is no longer a lumbering framework; it has been streamlined and is production-ready. PyTorch has not been beaten for speed of experimentation and flexibility. JAX has gone from a relatively unknown research tool to powering next-generation AI systems behind the scenes.

But here is the deal: you can’t afford to be pigeonholed into one framework anymore. Things move too quickly in the AI space. The ideal Deep Learning Course will give you the mindset to adapt, tools to play with, and the ability to ship models regardless of the underlying framework.

So, whether you are just starting out or upskilling as a working professional, choose a course that gets you off the bench. The best way to become competent in deep learning is not by reading about frameworks but by using them.
