The Digital Symphony: How AI is Orchestrating the Future of Music Production

The soundscape of modern music is undergoing a profound transformation. From the independent bedroom producer to the seasoned studio engineer, the tools of creation are evolving at an unprecedented pace. Central to this revolution is Artificial Intelligence, a force reshaping how we conceive, produce, and consume music. This shift isn’t just about automation; it’s about empowerment, democratizing access to sophisticated sound design, and driving the entire music industry towards a more digital-centric future.

The Dawn of Algorithmic Composition: A New Era

The integration of AI into music production software is not merely a trend; it’s a fundamental paradigm shift. Historically, music creation demanded a blend of innate talent, years of training, and often, expensive equipment. AI is dismantling these barriers, offering intuitive interfaces and intelligent algorithms that assist, inspire, and even generate musical ideas. This new era sees technology not as a replacement for human creativity, but as a powerful collaborator, expanding the horizons of what’s possible.

One particularly captivating area within this AI-driven evolution is the emergence of specialized tools that cater to specific stylistic demands. Think about the pervasive popularity of “slowed and reverb” tracks. This subgenre, characterized by its ethereal, often melancholic sound, has captured the attention of millions across various platforms. The ability to effortlessly transform existing tracks into these atmospheric renditions has become a coveted skill, and AI is stepping in to make it universally accessible.

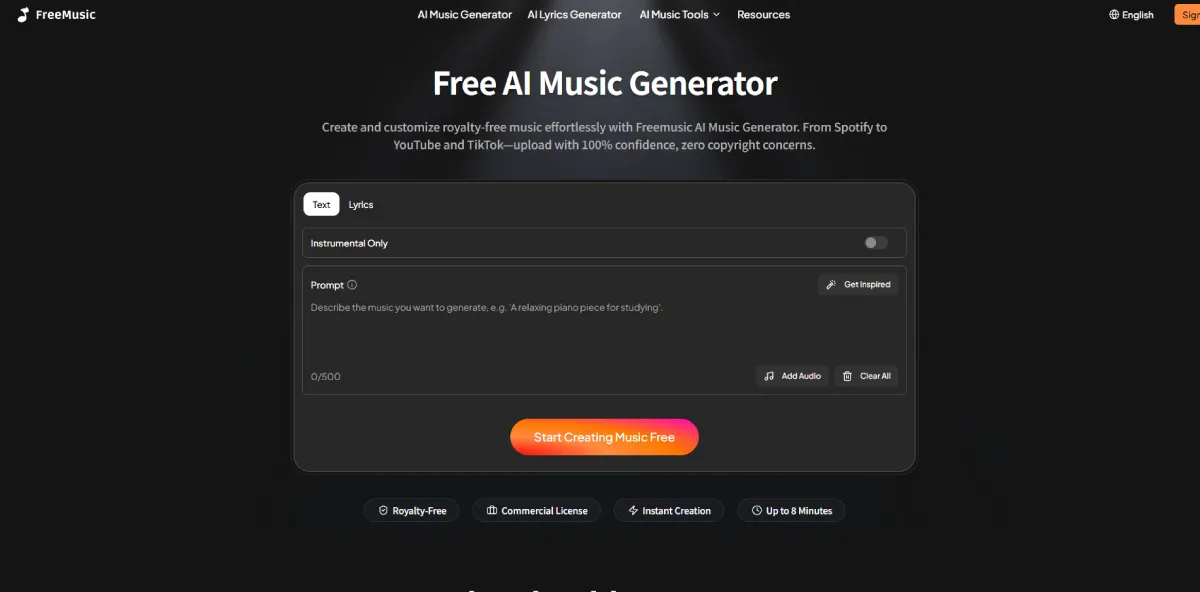

Freemusic AI: Mastering the Atmospheric Echo

Amidst this exciting landscape, platforms like Freemusic AI are carving out a significant niche. Freemusic AI, as its name suggests, leverages artificial intelligence to provide users with innovative music production capabilities. While the platform offers a range of features, its proficiency in generating slowed and reverb effects stands out as a particularly compelling application of AI in music. This isn’t just about pitching down a track and adding a standard reverb; it’s about intelligent processing that maintains musicality and emotional resonance.

The essence of a great slowed and reverb track lies in its ability to evoke a specific mood. This requires more than just technical application; it demands an understanding of musical phrasing, harmonic context, and rhythmic integrity. Freemusic AI aims to bridge this gap, allowing creators to apply these complex effects with a streamlined workflow, even without extensive audio engineering expertise. The platform exemplifies how AI can translate nuanced audio manipulation into an accessible and efficient process, enabling a broader spectrum of creators to achieve professional-sounding results.
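For readers curious what the naive baseline looks like, here is a minimal Python sketch of "pitching down a track and adding a standard reverb." It is not Freemusic AI's algorithm, which this article does not describe. The function names (`slow_down`, `comb_reverb`) and every parameter value are assumptions chosen for illustration. Slowing is done by linear-interpolation resampling, which lowers tempo and pitch together, and the reverb is a single feedback comb filter, one of the simplest building blocks of classic algorithmic reverbs.

```python
def slow_down(samples, speed):
    """Resample a mono signal so it plays at `speed` times the original rate.

    speed=0.8 makes the clip 25% longer and lowers the pitch along with the
    tempo, much like slowing down a tape. (Hypothetical helper.)
    """
    if not 0 < speed <= 1:
        raise ValueError("speed must be in (0, 1]")
    if len(samples) < 2:
        return list(samples)
    n_out = round(len(samples) / speed)
    out = []
    for i in range(n_out):
        pos = i * speed                      # fractional position in the input
        lo = int(pos)
        hi = min(lo + 1, len(samples) - 1)   # clamp at the last sample
        frac = pos - lo
        out.append(samples[lo] * (1 - frac) + samples[hi] * frac)
    return out


def comb_reverb(samples, sample_rate, delay_seconds=0.05, feedback=0.6,
                wet=0.4, tail_seconds=1.0):
    """Blend a signal with a feedback comb filter: y[n] = x[n] + g * y[n - D].

    `wet` sets how much of the echoing signal is mixed in (0 = dry only,
    1 = echo only). All defaults are illustrative assumptions.
    """
    if not 0 <= feedback < 1:
        raise ValueError("feedback must be in [0, 1) to stay stable")
    if not 0 <= wet <= 1:
        raise ValueError("wet must be in [0, 1]")
    delay = max(1, int(delay_seconds * sample_rate))
    n_out = len(samples) + int(tail_seconds * sample_rate)  # room for the tail
    echo = [0.0] * n_out
    out = []
    for i in range(n_out):
        dry = samples[i] if i < len(samples) else 0.0
        fed_back = echo[i - delay] if i >= delay else 0.0
        echo[i] = dry + feedback * fed_back
        out.append((1 - wet) * dry + wet * echo[i])
    return out
```

Applied to a one-second clip with `speed=0.8`, `slow_down` returns a clip 1.25 seconds long, and `comb_reverb` then appends a decaying tail. A single comb filter tends to sound metallic on real music, which hints at why a polished result takes more than this baseline.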

Exploring the Core Capabilities of Freemusic AI

Freemusic AI’s suite of features is designed to empower both novice and experienced producers. At its heart lies the Slowed and Reverb Generator, a testament to the platform’s focus on contemporary sound design. This specific function allows users to upload an audio file and, with a few clicks, apply the characteristic slowed tempo and expansive reverb. The AI algorithms work to intelligently adjust parameters, aiming for a cohesive and pleasing sonic outcome rather than a disjointed effect.

Beyond this signature feature, the platform may offer supporting tools, such as basic editing functions like trimming or looping audio, or controls for adjusting the intensity of the slowing and reverb. The goal is to provide a comprehensive, albeit focused, toolkit for transforming audio, so that users can not only generate the desired effect but also fine-tune it to fit their creative vision. The simplicity of the interface belies the processing happening in the background, making complex audio work feel intuitive.

Engaging with the Platform: A User’s Journey

Using Freemusic AI typically involves a straightforward process designed for efficiency. First, the user navigates to the platform and is prompted to upload an audio file, which could be anything from a vocal recording to an instrumental track or a full song. Once the file is uploaded, the interface guides the user to select the desired effect: in this case, the slowed and reverb transformation.

The AI then takes over, processing the audio according to its algorithms. This usually happens in a relatively short amount of time, depending on the file size and the complexity of the processing. Finally, the transformed track is presented to the user, often with options for previewing and downloading. This seamless workflow is crucial for creators who want to experiment quickly and integrate new sounds into their projects without getting bogged down by technical intricacies. The accessibility of such tools empowers rapid prototyping and iteration, fostering a more agile creative process.
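The same load, process, save loop can be tried locally. The sketch below is a stand-in for that workflow, not Freemusic AI's interface: it uses Python's standard `wave` module to copy a WAV file while relabelling its frame rate, the crudest way to slow a track (tempo and pitch drop together). The function name `slow_wav`, the file paths, and the default `speed` are all assumptions for illustration.

```python
import wave


def slow_wav(src_path, dst_path, speed=0.8):
    """Copy a WAV file, lowering its declared frame rate so it plays slower.

    The audio samples are untouched; only the header's frame rate changes.
    Returns the new frame rate. (Hypothetical helper.)
    """
    if not 0 < speed <= 1:
        raise ValueError("speed must be in (0, 1]")

    # "Upload": read the source file's format and raw frames.
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        frames = src.readframes(params.nframes)

    # "Process": compute the slower playback rate.
    new_rate = max(1, round(params.framerate * speed))

    # "Download": write the same frames under the new frame rate.
    with wave.open(dst_path, "wb") as dst:
        dst.setparams(params)
        dst.setframerate(new_rate)
        dst.writeframes(frames)
    return new_rate
```

Calling `slow_wav("in.wav", "out.wav")` on a 44.1 kHz file writes a copy labelled 35,280 Hz. Some players handle unusual sample rates poorly, so a fuller tool would resample the audio instead of only editing the header.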

Assessing the Merits and Limitations

Like any technological advancement, AI music production tools, and Freemusic AI specifically, come with their own set of advantages and potential drawbacks. On the positive side, the sheer accessibility these platforms offer is revolutionary. They democratize complex audio effects, allowing anyone with an internet connection to experiment with sophisticated sound design. This fosters creativity and lowers the barrier to entry for aspiring producers. Furthermore, the speed at which these effects can be applied is a significant time-saver, freeing up creators to focus on other aspects of their music. Consistency is also a benefit: an automated effect is applied the same way each time, which gives results a predictable baseline of quality.

However, limitations do exist. While AI can emulate certain styles and effects, it often lacks the nuanced human touch that experienced audio engineers bring to their craft. The artistry of manual mixing and mastering, the subtle decisions made based on emotional context, are still areas where human expertise remains paramount. There’s also the potential for stylistic homogenization if too many creators rely solely on algorithmic solutions without adding their unique creative stamp. Thus, while Freemusic AI offers powerful tools, it’s best viewed as an augmentation to, rather than a replacement for, human ingenuity.

Who Benefits Most from AI-Powered Music Tools?

The target audience for platforms like Freemusic AI is broad, encompassing various types of music enthusiasts and professionals. Independent artists and bedroom producers are perhaps the most direct beneficiaries. They often operate on limited budgets and resources, and AI tools provide them with professional-grade effects without the need for expensive software or extensive training. This allows them to compete more effectively in the digital music market.

Content creators and YouTubers also find immense value in such platforms. They frequently need to create unique audio backdrops or modify existing music for their videos and podcasts. The quick generation of slowed and reverb tracks can instantly add a desired atmosphere to their content. Furthermore, DJs and remix artists can use these tools for quick experiments, transforming tracks for live sets or creating unique edits for their mixes. The ability to rapidly prototype new sounds is invaluable for those constantly innovating in performance and production. The Slowed and Reverb Generator within Freemusic AI becomes a crucial tool for these diverse user groups, enabling them to produce engaging audio content efficiently.

AI’s Role in a Digitally Driven Music Industry

The advent of AI music production software is not just about creating new sounds; it’s about fundamentally altering the economic and distribution models of the music industry. By empowering individual creators, AI is fueling the rise of a more decentralized, digitally driven market. Artists no longer need major labels to access high-quality production tools; they can create, mix, and even master their tracks using AI-assisted software. This shift lowers overheads and allows artists to retain more control over their intellectual property and revenue streams.

Moreover, AI is helping to identify trends and preferences, informing artists and marketers about what resonates with audiences. This data-driven approach, combined with accessible production tools, allows for more agile and responsive content creation. The music market is becoming increasingly personalized, with AI playing a role in both generating tailored content and recommending it to listeners. Freemusic AI, by focusing on popular styles like slowed and reverb, directly taps into existing market demands, demonstrating how AI can align production capabilities with consumer tastes in a highly efficient manner. This digital transformation is fostering a more dynamic and competitive landscape.

Reflecting on the Evolution of Sound

The journey of music production has always been intertwined with technological innovation. From the invention of the phonograph to the advent of digital audio workstations, each leap forward has reshaped how we create and experience music. AI represents the latest, and arguably one of the most significant, of these leaps. It’s not simply a new instrument or effect; it’s an intelligent assistant that learns, adapts, and empowers.

Freemusic AI, with its dedicated Slowed and Reverb Generator, stands as a prime example of how specialized AI tools are catering to the evolving tastes of a global audience. It’s a testament to the idea that sophisticated production can be made accessible, driving creativity and fostering a new generation of artists. As AI continues to evolve, we can anticipate even more intuitive, powerful, and transformative tools emerging, further blurring the lines between human intuition and algorithmic precision, ultimately enriching the vibrant tapestry of the digital music landscape.
