AI Lip Sync Guide: Turn Photos to Video

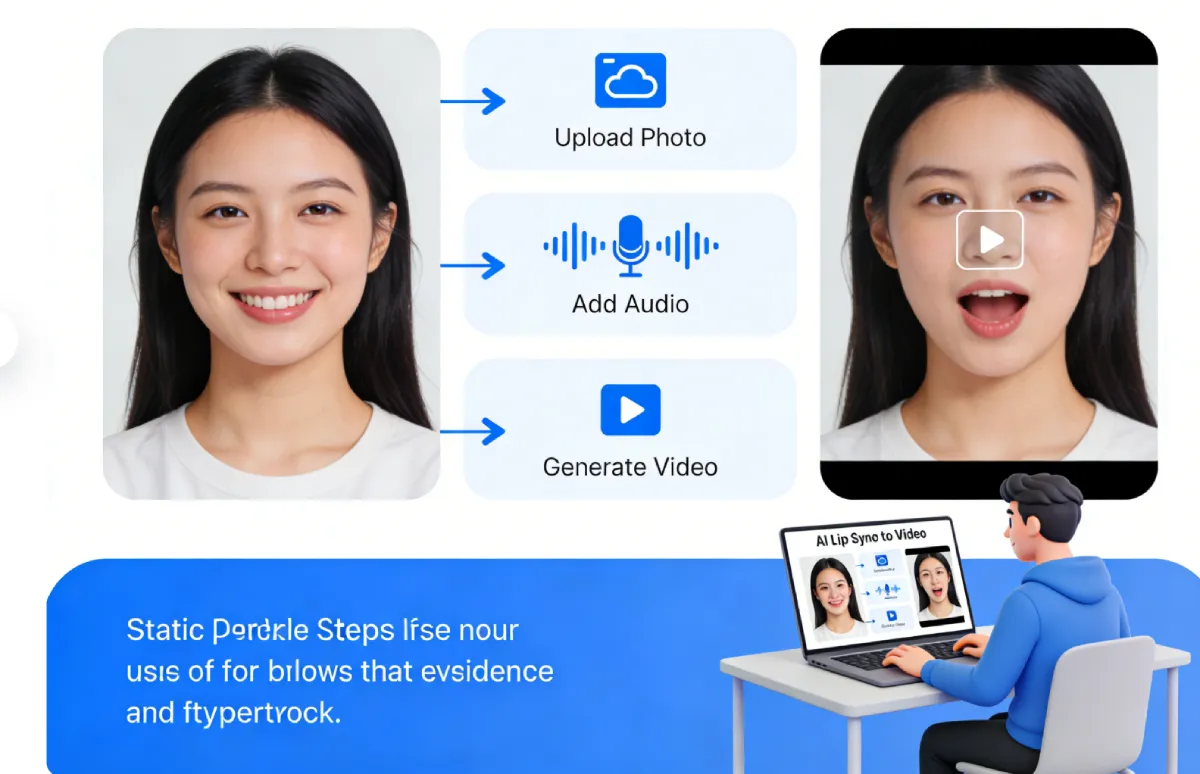

We have entered an era where visual content dominates the digital landscape, yet static imagery often fails to hold a modern audience’s attention. For decades, animating a face and making it speak was reserved for high-budget Hollywood studios using motion capture suits and complex CGI. Today’s shift is driven by rapid advances in neural networks and machine learning. We no longer just look at photos; we want to interact with them. This demand has given rise to lip sync AI technology, which bridges the gap between still photography and dynamic video storytelling. Whether you are a marketer looking to personalize outreach or a creator wanting to bring a historical figure to life, the barrier to entry has dropped dramatically. With modern lip sync AI algorithms, anyone can take a simple portrait and give it voice, emotion, and movement, turning a frozen moment into a compelling talking avatar.

Why Choose an AI Lip Sync Generator?

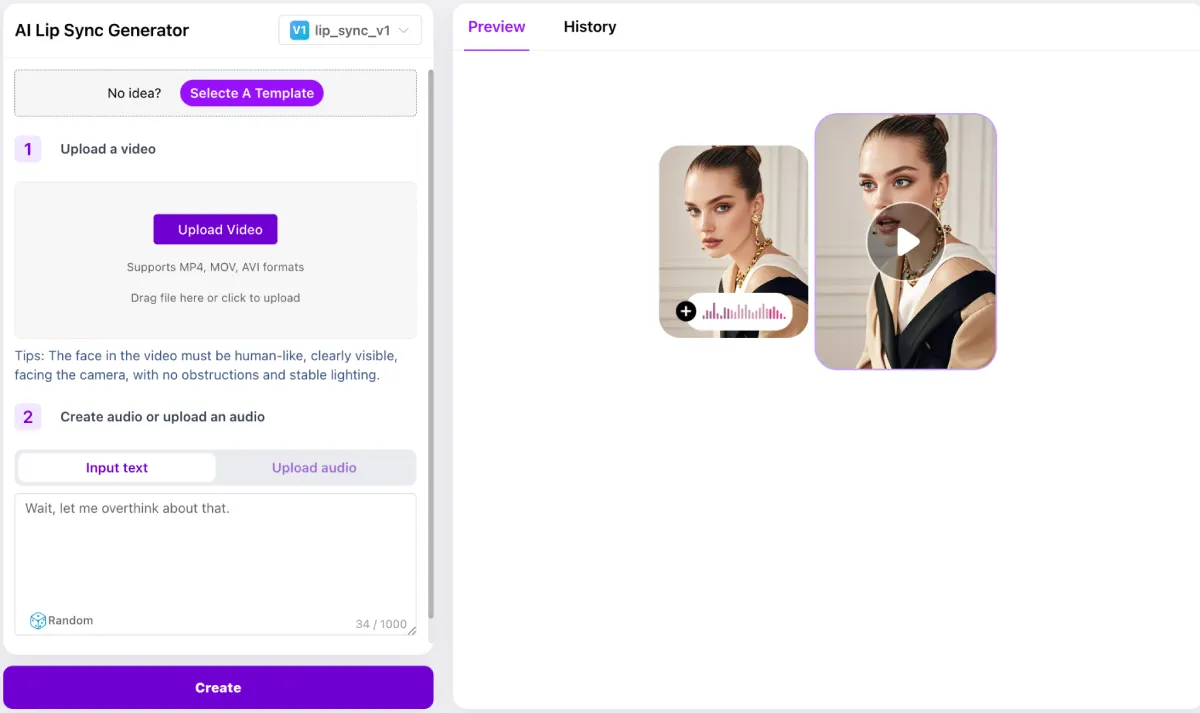

The transition from static images to talking avatars is not just a novelty; it represents a fundamental shift in how we produce content. Utilizing an AI lip sync generator offers distinct advantages that traditional video production methods simply cannot match. From efficiency to scalability, these tools are reshaping the creator economy.

Unmatched Speed and Production Efficiency

In the traditional animation pipeline, synchronizing lip movements to audio is a painstaking process. An animator would typically have to manually keyframe mouth shapes (visemes) to match specific phonemes in the audio track. A thirty-second clip could take days to perfect.
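To make the manual workflow concrete, here is a minimal Python sketch of the bookkeeping an animator does by hand: turning timed phonemes into mouth-shape keyframes. It assumes the phonemes and their start times have already been extracted from the audio, and the phoneme-to-viseme table is a simplified, hypothetical grouping (using ARPAbet-style symbols) rather than any tool’s actual viseme set.

```python
from dataclasses import dataclass

# Hypothetical, simplified phoneme-to-viseme table (ARPAbet-style symbols).
# Real tools define their own viseme sets; this grouping is illustrative only.
PHONEME_TO_VISEME = {
    "P": "closed", "B": "closed", "M": "closed",
    "F": "lip_teeth", "V": "lip_teeth",
    "AA": "open", "AE": "open", "AH": "open",
    "IY": "wide", "EH": "wide",
    "UW": "round", "OW": "round", "W": "round",
}


@dataclass
class Keyframe:
    frame: int
    viseme: str


def phonemes_to_keyframes(timed_phonemes, fps=24):
    """Convert (phoneme, start_seconds) pairs into viseme keyframes.

    Consecutive phonemes that share a viseme are merged into one key,
    roughly what an animator does when blocking mouth shapes by hand.
    """
    keyframes = []
    for phoneme, start in timed_phonemes:
        # Unknown sounds fall back to a neutral "rest" mouth.
        viseme = PHONEME_TO_VISEME.get(phoneme, "rest")
        frame = round(start * fps)
        if keyframes and keyframes[-1].viseme == viseme:
            continue  # the mouth shape doesn't change, so no new key is needed
        keyframes.append(Keyframe(frame, viseme))
    return keyframes


# "mama" spoken quickly: M AA M AA at 0.1-second intervals.
keys = phonemes_to_keyframes([("M", 0.0), ("AA", 0.1), ("M", 0.2), ("AA", 0.3)])
```

Even this toy version shows why the job is tedious by hand: every syllable needs its own key, and a real table has to cover every sound in the language.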

AI lip sync generators remove most of this bottleneck. By analyzing the audio waveform and the facial structure of the uploaded photo together, the AI predicts the necessary mouth movements in real time or near real time. What used to take days of manual labor can now be done in minutes. This speed lets content creators react to trends almost instantly. For example, a news outlet could generate a talking avatar news anchor to deliver a breaking update moments after the script is written, without a camera crew or a human presenter on standby.
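For contrast, the simplest automated approach predates neural networks: open the jaw in proportion to how loud the audio is. The sketch below assumes mono audio samples given as floats in [-1, 1] and computes the root-mean-square (RMS) loudness for each video frame. It is a baseline for illustration only; the AI generators described above learn far richer mappings from audio to the whole face.

```python
import math


def mouth_openness(samples, sample_rate=16000, fps=25):
    """Return one jaw-openness value in [0, 1] per video frame.

    A crude loudness-driven baseline, NOT a neural lip sync model:
    louder audio windows simply open the mouth wider.
    """
    hop = sample_rate // fps  # audio samples per video frame
    rms_values = []
    for start in range(0, len(samples), hop):
        window = samples[start:start + hop]
        rms = math.sqrt(sum(s * s for s in window) / len(window))
        rms_values.append(rms)
    peak = max(rms_values, default=0.0)
    if peak == 0:
        return [0.0] * len(rms_values)  # pure silence: keep the mouth closed
    # Normalize so the loudest frame is fully open.
    return [r / peak for r in rms_values]
```

Feeding `mouth_openness` one second of 16 kHz audio yields 25 values, one per frame at 25 fps. Because loudness says nothing about which sound is being made, this approach cannot tell an “m” (lips closed) from an “ah” (jaw open), which is exactly the gap that learned models fill.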

Cost-Effective Video Scalability

Video production is notoriously expensive. It involves cameras, lighting, microphones, actors, and post-production crews. For small businesses or independent creators, these costs are often prohibitive.

AI tools democratize this process. With a single photo and a script (voiced by text-to-speech or a recorded voiceover), you can create professional-looking video content. High-quality AI voices remove the need to hire voice actors, and there is no need to rent a studio. This approach also scales. A company can create thousands of personalized videos for individual customers by changing only the script, while the AI handles the lip synchronization automatically. This level of personalized marketing was previously impractical on most budgets but is now a standard capability of these generators.
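The personalization workflow amounts to a loop over customer records with one reusable photo. The sketch below builds one render request per customer using Python’s `string.Template`; the request fields (`avatar_image`, `script`, `output`), the template wording, and the file names are all hypothetical, since each lip sync service defines its own API.

```python
from string import Template

# Hypothetical script template; $first_name and $product are placeholders.
SCRIPT = Template(
    "Hi $first_name, thanks for trying $product. Here's a quick tip to get started."
)


def build_render_jobs(customers, avatar_image="spokesperson.png"):
    """Create one render request per customer from a single photo and template.

    The job fields are illustrative; a real lip sync service would define
    its own request format.
    """
    jobs = []
    for c in customers:
        jobs.append({
            "avatar_image": avatar_image,  # the same photo is reused for every video
            "script": SCRIPT.substitute(first_name=c["first_name"], product=c["product"]),
            "output": f"video_{c['id']}.mp4",
        })
    return jobs
```

Only the `script` field varies between jobs, which is why the marginal cost of each extra video is so low.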

Overcoming the “Uncanny Valley” with Precision

Early attempts at automated lip-syncing were often jarring, falling deep into the “uncanny valley”—where the face looks human but moves in a disturbing, robotic way. This often did more harm than good for engagement.

Modern AI lip sync generators have greatly reduced this problem through deep learning. They don’t just move the mouth; they model how speech correlates with movement across the entire face. When we speak, our jaw moves, our cheeks lift slightly, and our eyes may squint or widen. High-quality AI generators map these micro-expressions onto the static photo. The result is a fluid, organic animation in which the head moves naturally and the eyes blink, creating a sense of presence that feels authentic rather than artificial. This precision is crucial for maintaining viewer retention and trust.

Top Use Cases for Talking Avatars

The utility of transforming a photo into a talking avatar extends far beyond simple entertainment. Various industries are adopting this technology to solve communication problems, enhance engagement, and personalize user experiences. Here is how you can leverage lip sync AI across different scenarios.

Engaging Educational Content and E-Learning

The education sector faces a constant battle for student attention, especially in remote learning environments. Text-heavy slides and disembodied voiceovers often lead to cognitive disengagement.

How to use it: Educators can create a diverse range of AI avatars to act as virtual tutors. For a history lesson, a teacher could take a photo of Abraham Lincoln or Albert Einstein and have an AI lip sync tool animate it to deliver the lecture. This brings history to life in a way a textbook cannot. For language learning, an avatar that shows the mouth movements for each word (even simulated ones) may help students grasp pronunciation better than audio alone. The visual cue of a speaking face also creates a sense of connection, mimicking a one-on-one tutoring session.

Dynamic Social Media Marketing

On platforms like TikTok, Instagram Reels, and YouTube Shorts, the algorithm favors dynamic, fast-paced video content. Faceless brands or influencers who prefer anonymity often struggle to build a personal connection with their audience.

How to use it: Marketers can create a consistent “brand mascot” or virtual influencer using a generated avatar. Instead of filming a spokesperson every day, the social media team can write a script about a product launch or a trending topic, upload the brand avatar, and generate a video in minutes. This keeps the brand visually consistent across posts. It also enables A/B testing of different avatars to see which “face” resonates with which demographic, optimizing engagement without the cost of hiring multiple actors.

Personalized Customer Support

Chatbots have become a standard in customer service, but they often feel cold and impersonal. Text-based interactions lack the empathy and reassurance that a human face provides.

How to use it: Companies can integrate talking avatars into their helpdesk systems. When a user asks a common question, instead of receiving a wall of text, they see a friendly avatar explain the solution aloud. This is particularly useful for complex troubleshooting, where tone of voice matters. With lip sync AI, these responses can be generated dynamically from the user’s name and specific problem, making customers feel seen and valued and potentially raising customer satisfaction (CSAT) scores.

Bringing History and Ancestors to Life

Genealogy has become a popular pastime. Many of us stare at old, sepia-toned photographs of great-grandparents, wondering what they were like.

How to use it: This is one of the most emotional use cases for the technology. Individuals can upload scanned photos of deceased relatives. By pairing the photo with an old audio recording (if available) or a voice clone that mimics their accent, families can create “living memories.” Museums and heritage sites are also using this to animate historical figures at exhibits, allowing visitors to “converse” with the past. It transforms passive observation into an active, emotional experience.

Corporate Training and Onboarding

Employee onboarding can be a dry process involving hours of reading handbooks or watching outdated compliance videos. Updating these videos usually requires a reshoot, which is expensive and time-consuming.

How to use it: HR departments can utilize talking avatars to deliver training modules. If a policy changes, the HR team doesn’t need to re-film the training manager; they simply update the text script, and the AI generates the new video with the correct lip sync. This ensures that training materials are always up-to-date and consistent globally. It also allows for easy localization; the same avatar can deliver the training in Spanish, French, or Mandarin to international teams without dubbing issues, as the lips will re-sync to the new language.
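Keeping training videos current mostly comes down to knowing which scripts changed. A minimal sketch, assuming you store a fingerprint of each module’s script per language from the last render: hash the current text with SHA-256 and re-render only the module and language pairs whose hash differs. The data layout here is a hypothetical bookkeeping scheme, not a feature of any particular product.

```python
import hashlib


def script_fingerprint(language, text):
    """Hash the language code and script text so any wording change is detected."""
    return hashlib.sha256(f"{language}\n{text}".encode("utf-8")).hexdigest()


def modules_to_rerender(modules, previous_fingerprints):
    """Return (module_id, language) pairs whose script changed since the last render.

    `modules` maps module_id -> {language: script_text}; `previous_fingerprints`
    maps (module_id, language) -> fingerprint recorded at the last render.
    Both structures are hypothetical.
    """
    stale = []
    for module_id, scripts in modules.items():
        for language, text in scripts.items():
            key = (module_id, language)
            # Missing or mismatched fingerprints both mean "needs a new video".
            if previous_fingerprints.get(key) != script_fingerprint(language, text):
                stale.append(key)
    return stale
```

Tracking each language separately means a fix to the Spanish script triggers only the Spanish video, while the other localized versions stay untouched.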

Interactive Gaming and RPG Storytelling

Indie game developers and Dungeon Masters (for tabletop games like D&D) often have grand visions for storytelling but lack the budget for full 3D animation rigs.

How to use it: AI lip sync tools allow developers to create immersive dialogue scenes using 2D character portraits. Instead of static text boxes, Non-Player Characters (NPCs) can actually speak to the player. For tabletop enthusiasts, a Game Master can generate a video message from the “villain” to send to the players between sessions, heightening the immersion and drama of the campaign. This adds a layer of production value that was previously accessible only to AAA game studios.

Key Features of Modern Lip Sync AI Tools

When evaluating which AI lip sync generator to use, it is essential to understand the specific features that differentiate a basic tool from a professional-grade platform. The following features are what you should look for to ensure high-quality output.

Realistic Facial Micro-Expressions

As mentioned earlier, the key to believability lies beyond the mouth. The best tools on the market today feature “full-face animation.”

With this feature, the AI analyzes the emotional tone of the audio and adjusts the face to match. If the voice sounds angry, the eyebrows furrow; if it sounds happy, the eyes crinkle at the corners. Advanced algorithms also handle head movements (tilting, nodding, and swaying) to avoid the “stiff neck” syndrome often seen in older software. Some tools even let you control the intensity of these expressions, giving you directorial control over the avatar’s performance.
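Intensity control can be pictured as a multiplier on a set of expression weights. The sketch below assumes an emotion label has already been estimated from the audio; the preset names and weights are invented for illustration (real face rigs expose their own controls), and the 0 to 2 intensity range is an arbitrary choice.

```python
# Hypothetical expression presets; the control names are illustrative and
# would need to match whatever face rig or tool you actually use.
EXPRESSION_PRESETS = {
    "angry": {"brow_lower": 0.8, "eye_squint": 0.4, "lip_press": 0.5},
    "happy": {"cheek_raise": 0.7, "eye_squint": 0.5, "mouth_smile": 0.9},
    "neutral": {},
}


def expression_weights(emotion, intensity=1.0):
    """Scale a preset by a user-chosen intensity, clamping each weight to at most 1."""
    if not 0.0 <= intensity <= 2.0:
        raise ValueError("intensity must be between 0 and 2")
    # Unrecognized emotions fall back to a neutral face.
    preset = EXPRESSION_PRESETS.get(emotion, EXPRESSION_PRESETS["neutral"])
    return {name: min(1.0, w * intensity) for name, w in preset.items()}
```

An intensity of 0.5 gives a subtle performance, while 2.0 pushes most controls to their limit, which the clamp keeps from exceeding the rig’s full range.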

Multi-Language Voice Support and Translation

A static image has no nationality, and AI lip sync tools exploit this by offering robust multi-language support.

Top-tier generators integrate advanced text-to-speech (TTS) engines that support dozens, sometimes hundreds, of languages and accents. The critical feature is that the lip sync adapts to the phonemes of the target language. Japanese, English, and German use different sets of sounds, so the sequence of mouth shapes needed to speak each one differs, and a high-quality generator adjusts the facial animation accordingly. This allows a creator to take a single photo of a CEO and have them deliver a message in five different languages to global branches, with lip movements matched to each language.

Custom Voice Uploads and Cloning

While TTS voices are convenient, they can lack the distinctive nuance of a specific person’s voice or a brand’s established sound.

The ability to upload your own audio file is a standard but vital feature. However, the cutting-edge feature is Voice Cloning. This allows users to record a minute of their own voice (or an actor’s voice) to train the AI. Once the voice is cloned, you can type text, and the avatar will speak it using that specific voice replica. This creates the ultimate personalized video: your face (or your avatar’s face) speaking with your unique vocal signature, all generated from text without you ever hitting “record” on a camera.

High-Definition Rendering and Background Integration

The quality of the input photo matters, but the quality of the output video matters more.

Leading AI lip sync generators now support 1080p and even 4K rendering. They use “super-resolution” or upscaling AI to ensure that the animated face remains sharp and does not become pixelated during movement. Additionally, advanced tools offer background removal and replacement. You can upload a photo, have the AI remove the original background, and place the talking avatar in a newsroom, a classroom, or a fantasy landscape. This compositing capability turns a simple portrait tool into a full-fledged video production suite.
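Background replacement typically starts from a matte: a per-pixel alpha value in [0, 1] that the background-removal step assigns to the subject. Each output pixel is then the standard alpha blend C = αF + (1 - α)B of the foreground F and the new background B. The pure-Python sketch below shows that arithmetic on tiny images represented as lists of RGB tuples; it assumes straight (non-premultiplied) alpha, and a production pipeline would use an image library and apply the same blend to every video frame.

```python
def composite_pixel(fg, bg, alpha):
    """Blend one RGB pixel: alpha * foreground + (1 - alpha) * background.

    Results are rounded to integers (Python's round() uses round-half-to-even).
    """
    return tuple(round(alpha * f + (1 - alpha) * b) for f, b in zip(fg, bg))


def composite(fg_image, bg_image, matte):
    """Place the subject over a new background.

    fg_image and bg_image are lists of rows of RGB tuples; matte is a list of
    rows of alpha values in [0, 1] (1 = subject, 0 = background).
    """
    return [
        [composite_pixel(f, b, a) for f, b, a in zip(frow, brow, arow)]
        for frow, brow, arow in zip(fg_image, bg_image, matte)
    ]
```

Fractional alpha values along the edge of the matte are what let hair and shoulders blend softly into the new scene instead of showing a hard cutout line.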

Conclusion: Embrace the Future of Digital Storytelling

The technology to turn a photo into a talking avatar is no longer science fiction; it is a readily available tool that is reshaping how we communicate, teach, and remember. With a capable lip sync AI generator, creators and businesses can bypass the traditional barriers of video production, saving time and money while opening up new creative possibilities. Whether you are using free lip sync AI trials to experiment with memes or deploying enterprise-grade lip sync AI solutions for global marketing campaigns, the range of applications is broad. As these tools evolve, the line between static art and living media will continue to blur, giving everyone a voice in the digital world. Now is the time to explore these tools and let your images speak.
